istio+flagger实现金丝雀部署

flagger 介绍

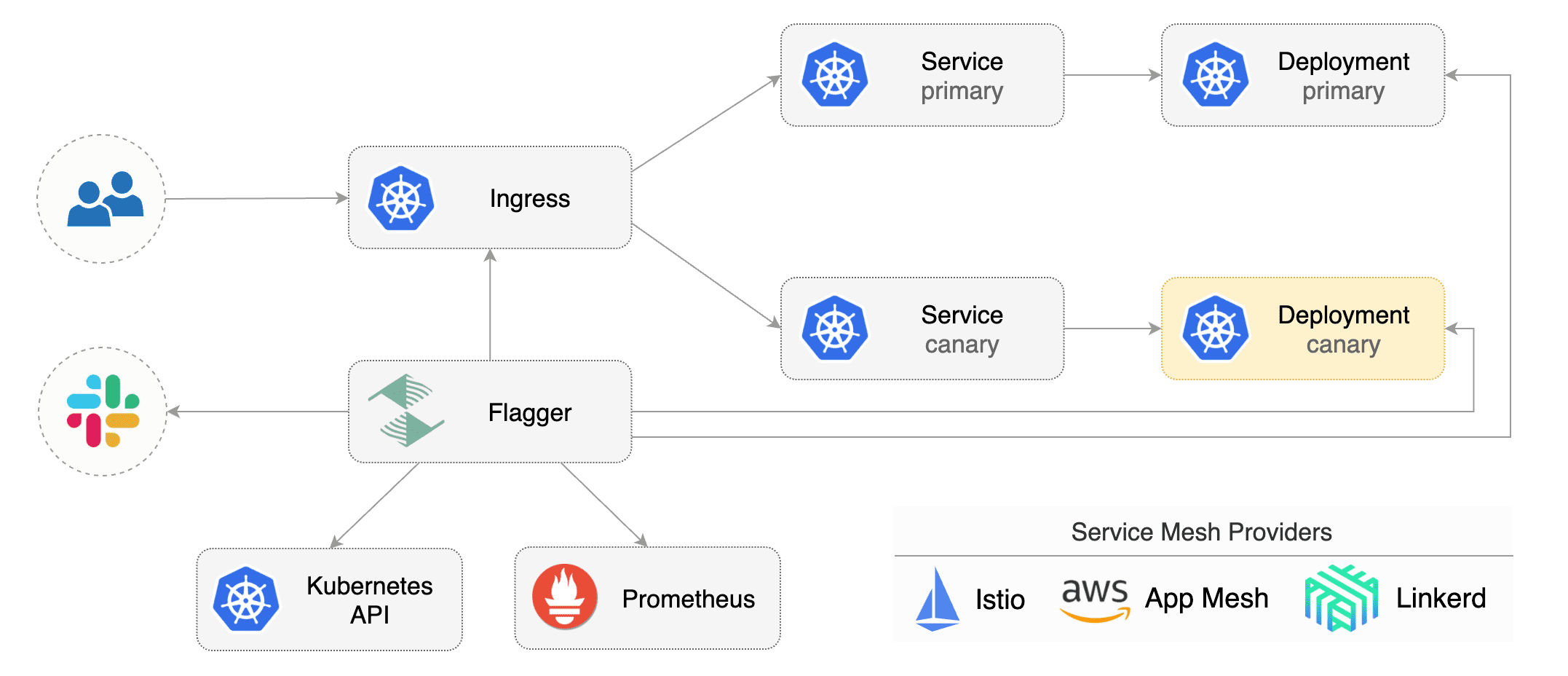

Flagger是Kubernetes的渐进交付operator

flagger是一个Kubernetes操作者能够自动促进使用金丝雀部署Istio,Linkerd,应用网格,NGINX,船长,轮廓或GLOO路由流量移位和普罗米修斯度量金丝雀分析。canary分析可以通过webhooks进行扩展,以运行系统集成/验收测试,负载测试或任何其他自定义验证。

Flagger实现了一个控制环路,该环路逐渐将流量转移到金丝雀,同时测量关键性能指标,例如HTTP请求成功率,请求平均持续时间和Pod运行状况。基于对KPI的分析,金丝雀会被提升或中止,并将分析结果发布给Slack或MS Teams。

Flagger可以使用Kubernetes自定义资源进行配置,并且与为Kubernetes制作的任何CI/CD解决方案兼容。由于Flagger是声明性的,并且对Kubernetes事件做出反应,因此它可以与Flux CD或JenkinsX一起用于GitOps管道中。

部署

添加flagger helm仓库

helm repo add flagger https://flagger.app

安装flagger canary crd

kubectl apply -f https://raw.githubusercontent.com/weaveworks/flagger/master/artifacts/flagger/crd.yaml

为istio部署flagger

helm upgrade -i flagger flagger/flagger --namespace=istio-system --set crd.create=false --set meshProvider=istio --set metricsServer=http://prometheus:9090

请注意,Flagger取决于Istio遥测和Prometheus,如果您要使用istioctl安装Istio,则应使用默认配置文件。

部署grfana

helm upgrade -i flagger-grafana flagger/grafana --namespace=istio-system --set url=http://prometheus.istio-system:9090 --set user=admin --set password=change-me

为示例程序创建istio gateway

apiVersion: networking.istio.io/v1alpha3 kind: Gateway metadata: name: public-gateway namespace: istio-system spec: selector: istio: ingressgateway servers:

port:

number: 80

name: http

protocol: HTTP

hosts:

"*"

初始化

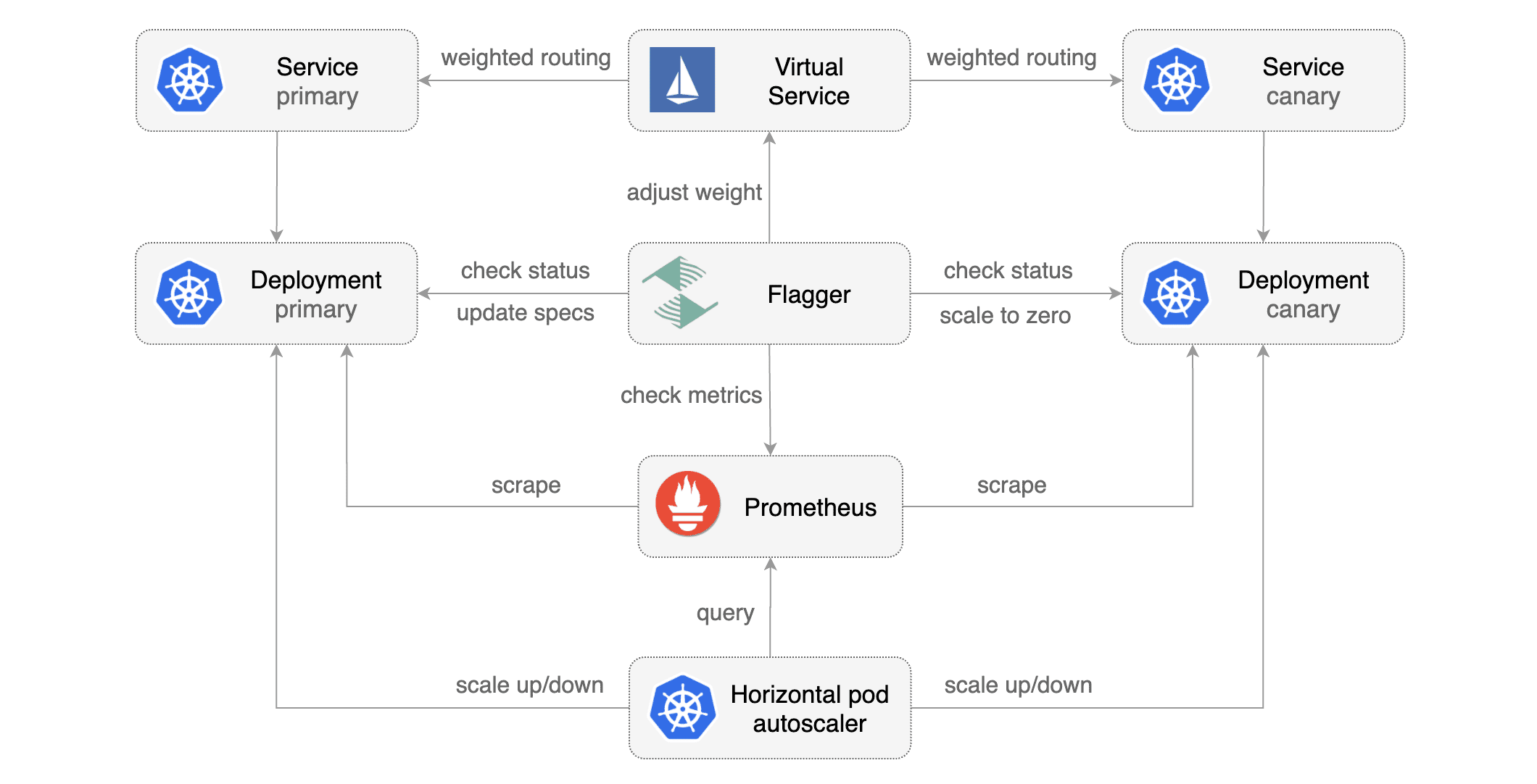

Flagger进行Kubernetes部署以及可选的水平Pod自动缩放器(HPA),然后创建一系列对象(Kubernetes部署,ClusterIP服务,Istio目标规则和虚拟服务).这些对象将应用程序暴露在网格内,并推动金丝雀的分析和提升。 创建一个启用Istio sidecar注入的测试名称空间:

kubectl create ns test kubectl label namespace test istio-injection=enabled

创建deployment和hpa

kubectl apply -k github.com/weaveworks/flagger//kustomize/podinfo

创建load testing服务用语在金丝月部署时产生流量

kubectl apply -k github.com/weaveworks/flagger//kustomize/tester

创建CR

apiVersion: flagger.app/v1beta1 kind: Canary metadata: name: podinfo namespace: test spec:

deployment reference

targetRef: apiVersion: apps/v1 kind: Deployment name: podinfo

the maximum time in seconds for the canary deployment

to make progress before it is rollback (default 600s)

progressDeadlineSeconds: 60

HPA reference (optional)

autoscalerRef: apiVersion: autoscaling/v2beta1 kind: HorizontalPodAutoscaler name: podinfo service:

analysis:

保存应用配置

kubectl apply -f ./podinfo-canary.yaml

当金丝雀分析开始时,Flagger在将流量路由到金丝雀之前将调用预部署webhooks.Canary分析将运行五分钟,同时每分钟验证HTTP指标和推出挂钩。

几秒钟后,Flagger将创建金丝雀对象

applied

deployment.apps/podinfo horizontalpodautoscaler.autoscaling/podinfo canary.flagger.app/podinfo

generated

deployment.apps/podinfo-primary horizontalpodautoscaler.autoscaling/podinfo-primary service/podinfo service/podinfo-canary service/podinfo-primary destinationrule.networking.istio.io/podinfo-canary destinationrule.networking.istio.io/podinfo-primary virtualservice.networking.istio.io/podinfo

自动金丝雀升级

更新镜像触发金丝雀升级

kubectl -n test set image deployment/podinfo podinfod=stefanprodan/podinfo:3.1.1

Flagger检测到部署修订已更改,并开始新的部署

kubectl -n test describe canary/podinfo

Status: Canary Weight: 0 Failed Checks: 0 Phase: Succeeded Events: Type Reason Age From Message

Normal Synced 3m flagger New revision detected podinfo.test Normal Synced 3m flagger Scaling up podinfo.test Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available Normal Synced 3m flagger Advance podinfo.test canary weight 5 Normal Synced 3m flagger Advance podinfo.test canary weight 10 Normal Synced 3m flagger Advance podinfo.test canary weight 15 Normal Synced 2m flagger Advance podinfo.test canary weight 20 Normal Synced 2m flagger Advance podinfo.test canary weight 25 Normal Synced 1m flagger Advance podinfo.test canary weight 30 Normal Synced 1m flagger Advance podinfo.test canary weight 35 Normal Synced 55s flagger Advance podinfo.test canary weight 40 Normal Synced 45s flagger Advance podinfo.test canary weight 45 Normal Synced 35s flagger Advance podinfo.test canary weight 50 Normal Synced 25s flagger Copying podinfo.test template spec to podinfo-primary.test Warning Synced 15s flagger Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

以下任何对象的更改都会触发金丝雀部署: 部署PodSpec(容器镜像,命令,端口,环境,资源等) ConfigMap作为卷安装或映射到环境变量 作为卷安装或映射到环境变量的机密 您可以使用以下方法监视所有金丝雀:

watch kubectl get canaries --all-namespaces

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME test podinfo Progressing 15 2019-01-16T14:05:07Z prod frontend Succeeded 0 2019-01-15T16:15:07Z prod backend Failed 0 2019-01-14T17:05:07Z

自动回滚

在canary分析期间,您可能会生成HTTP 500错误和高延迟,以测试Flagger是否暂停推出。 触发另一个金丝雀部署:

kubectl -n test set image deployment/podinfo podinfod=stefanprodan/podinfo:3.1.2

进入到load tester pod执行命令

kubectl -n test exec -it flagger-loadtester-xx-xx sh

生成500错误

watch curl http://podinfo-canary:9898/status/500

生成延迟

watch curl http://podinfo-canary:9898/delay/1

当检查失败的数量达到Canary分析阈值时,流量将路由回主数据库,Canary缩放为零,并且首次推出将标记为已失败。

kubectl -n test describe canary/podinfo

Status: Canary Weight: 0 Failed Checks: 10 Phase: Failed Events: Type Reason Age From Message

Normal Synced 3m flagger Starting canary deployment for podinfo.test Normal Synced 3m flagger Advance podinfo.test canary weight 5 Normal Synced 3m flagger Advance podinfo.test canary weight 10 Normal Synced 3m flagger Advance podinfo.test canary weight 15 Normal Synced 3m flagger Halt podinfo.test advancement success rate 69.17% < 99% Normal Synced 2m flagger Halt podinfo.test advancement success rate 61.39% < 99% Normal Synced 2m flagger Halt podinfo.test advancement success rate 55.06% < 99% Normal Synced 2m flagger Halt podinfo.test advancement success rate 47.00% < 99% Normal Synced 2m flagger (combined from similar events): Halt podinfo.test advancement success rate 38.08% < 99% Warning Synced 1m flagger Rolling back podinfo.test failed checks threshold reached 10 Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

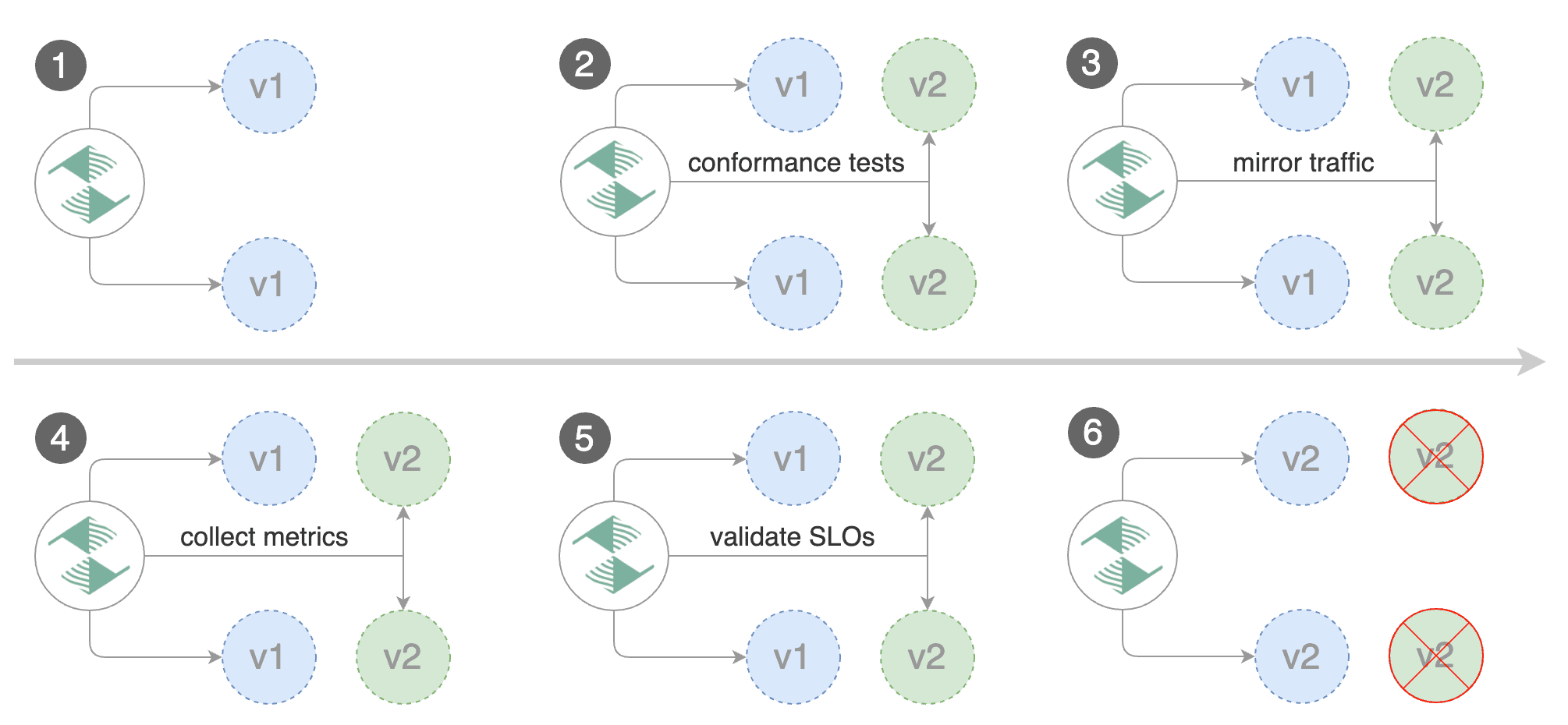

流量镜像

对于执行读取操作的应用程序,可以将Flagger配置为使用流量镜像来驱动金丝雀版本.Istio流量镜像将复制每个传入的请求,将一个请求发送到主要请求,将一个请求发送到canary服务.来自主数据库的响应被发送回用户,而来自金丝雀的响应被丢弃.在两个请求上都收集指标,因此只有在Canary指标在阈值内时,部署才会继续进行。 请注意,镜像应用于幂等或能够处理两次的请求(一次由主请求,一次由金丝雀进行处理)。 您可以通过以下方式启用镜像:将stepWeight/maxWeight替换为迭代,并将analysis.mirror设置为true:

apiVersion: flagger.app/v1beta1 kind: Canary metadata: name: podinfo namespace: test spec: analysis:

通过上述配置,Flagger将通过以下步骤运行canary版本:

检测新修订(部署规范,机密或配置映射更改)

从零扩展金丝雀部署

等待HPA设置金丝雀的最小副本

检查金丝雀pod健康

运行验收测试

如果测试失败,则中止金丝雀释放

开始负载测试

反映从主要到金丝雀的100%流量

每分钟检查一次请求成功率和请求持续时间

如果达到指标检查失败阈值,则中止金丝雀释放

达到迭代次数后停止流量镜像

将实时交通路由到金丝雀pod

提升金丝雀(更新主要机密,配置映射和部署规范)

等待主要部署部署完成

等待HPA设置主要的最小副本

检查主pod健康

将实时流量切换回主要流量

缩放到零金丝雀

发送带有金丝雀分析结果的通知

可以通过自定义指标检查,webhooks,手动升级批准以及Slack或MS Teams通知来扩展上述过程。

Last updated